How Does Microsoft Copilot Access, Use, and Protect Your Data?

What does it mean when a new feature is slipped into the familiar applications we’ve been using for years? In this case, it’s our go-to Microsoft Office suite with Copilot added to the toolkit. I had pretty much ignored Copilot until shadow AI became a topic that I knew deserved attention.

What exactly is Copilot and why do we care about it?

When you get past the warm, fuzzy marketing promises like “your everyday AI companion”, Microsoft’s Terms of Use don’t disappoint. They’re a masterclass in skillful obfuscation.

Since most of us don’t speak Microsoft, I took a trip down the Copilot rabbit hole to understand how our data is being used, where the guardrails are, and what life-changing benefits Copilot actually delivers.

First, let’s start by answering this simple question: what is Copilot?

It’s an AI tool, like ChatGPT, Gemini, and Claude, designed to improve your productivity and efficiency. It is built on OpenAI’s GPT models, the same family that powers ChatGPT. Microsoft launched it in February 2023 (as Bing Chat, renamed Copilot later that year), and it has since replaced Cortana (did anyone ever use this?).

Uncomplicating the 3 Copilot Options

As with all things Microsoft, sorting out features and services is a frustrating exercise. For simplicity, we’ll stick with these three options (as of June 25, 2024). Bear with me for a minute. Buried in the Terms of Use are data protection rules that make it important to understand the differences among these plans.

1. Copilot for Windows. This free version is bundled with Windows 11 and some versions of Windows 10. Windows users don’t explicitly sign up for it, and it is enabled by default. For companies without watchful IT leadership, that default props the door open for misguided shadow AI use. More on that below.

2. Copilot Pro. This monthly subscription adds the ability to use Copilot inside Office applications like Word and Excel. It is intended for individual users, not businesses, a distinction that matters for data protection, as we’ll see shortly.

3. Copilot for Microsoft 365 (Copilot for 365 for short). This is the more expensive of the two monthly subscriptions and is intended for businesses. It not only integrates with your Microsoft 365 apps but can also access internal company data.

The Copilot Versions Access and Safeguard Your Data Differently

When you ask the free Copilot or Copilot Pro a question, both ground their answers in a search of Microsoft Bing’s index (Microsoft’s answer to Google). In other words, the information they draw on comes from the public web, much like ChatGPT and the others.

On the other hand, Copilot for 365 expands its search to include not only Bing’s searchable data but also your internal company documents. Think about that for a moment.

Say, for example, you’re drafting a client proposal in Word and want to include research and analysis that supports your offering. Instead of a tedious, time-consuming search for individual documents, your helpful Copilot companion does the heavy lifting for you in a fraction of the time.

It sounds like a powerful tool until you stop and think about the implications. What if some of this information is highly confidential, only available to a select group within the company?

This is where responsible company IT guardrails come into play.

Without getting too far into the weeds in this short post, we’ll mention just one important security concept: the principle of least privilege. It means that each user on the company's network is granted access only to the data, resources, applications, and functions necessary to do their tasks.

It’s easy to see why this fundamental IT security practice takes on new importance. Copilot for 365 can surface any content a user already has permission to open, so overly broad access that once went unnoticed can suddenly show up in an AI-generated answer. A thorough review of every user on your network and their access privileges is an essential system admin responsibility before implementing Copilot for 365.
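To make the idea of an access review concrete, here is a minimal Python sketch. The roles, users, and resource names are all hypothetical, and a real review would pull permissions from your directory and file-sharing systems rather than from a hard-coded list. The sketch simply compares what each user can open against what their role actually needs and flags the excess, which is exactly the kind of oversharing an AI assistant with company-wide search could expose.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical role definitions: the resources each role genuinely needs.
ROLE_NEEDS: dict[str, set[str]] = {
    "sales": {"crm", "proposals"},
    "finance": {"ledger", "payroll"},
    "hr": {"payroll", "personnel_files"},
}


@dataclass
class User:
    name: str
    role: str
    granted: set[str]  # resources the user can currently open


def excess_access(user: User) -> set[str]:
    """Return grants beyond what the user's role needs.

    An unknown role is treated as needing nothing, so every grant is
    flagged for a human to look at (a deliberately cautious default).
    """
    return user.granted - ROLE_NEEDS.get(user.role, set())


def review(users: list[User]) -> dict[str, set[str]]:
    """Map each over-privileged user to the grants that should be revisited."""
    findings: dict[str, set[str]] = {}
    for user in users:
        extra = excess_access(user)
        if extra:
            findings[user.name] = extra
    return findings


if __name__ == "__main__":
    users = [
        User("alice", "sales", {"crm", "proposals", "payroll"}),
        User("bob", "finance", {"ledger", "payroll"}),
    ]
    for name, extra in review(users).items():
        print(f"{name}: revoke {sorted(extra)}")
```

Running it prints one finding: alice, a salesperson, can open payroll data she doesn’t need. The design choice worth noting is that the review works from what each role needs rather than from what each user happens to have, which is the principle of least privilege in a nutshell.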

How Microsoft Says It Protects Your Data

This was the question that first sent me down the research rabbit hole. As we all guard our valuable personal, company, and client data ever more closely, giving any AI tool, however helpful, access to our information feels like a risk.

While there are a lot of finer points to consider, here are a few key takeaways from my (many, many) reads of Microsoft’s data protection promises. Every time I read them, I still come away with one more “wait, what about” question, so this is only the tip of the trust iceberg.

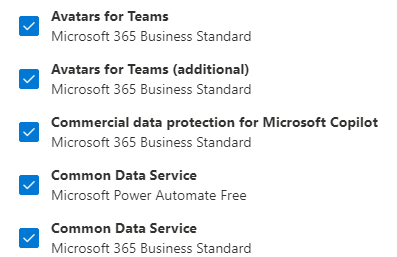

1. This brings us back to the three Copilot versions. At a high level, Microsoft provides commercial data protection under the conditions summarized in the image above.

2. If you are using a personal, family, or individual 365 account, commercial data protection does not apply.

3. Under commercial data protection, Microsoft does not use any of the data you enter into the Copilot prompt to train its large language models.

Microsoft also says it does not store any of your prompt data: once your chat window is closed or your browser session ends, your data is gone. However, Microsoft’s actual wording is one of those sentences that made me say, “Uh, wait a minute.”

“When commercial data protection is enabled, Microsoft doesn't retain this data beyond a short caching period for runtime purposes. After the browser is closed, the chat topic is reset, or the session times out, Microsoft discards all prompts and responses.”

“When commercial data protection is enabled…” suggests that a user’s data is up for grabs when this protection is turned off. My advice is to err on the side of caution and proceed carefully with every piece of data you feed to Copilot and any other AI tool.

4. As with all good things, there is always a warning label on the package.

In this case, the caution is no different from the one for ChatGPT and the rest: nothing prevents a user from pasting proprietary company data into the Copilot prompt box.

Even though Microsoft does not store this confidential data or use it for training, nothing prevents it from showing up in the answer Copilot delivers.

A user can easily share this answer, effectively sending company-only data outside your sacred boundaries. It is for this simple reason that continuous user training, awareness of changing features, and responsible guardrails are critical for every company.

Saying It In One Sentence

Taking the time to carefully read and consider the risks and rewards before adopting any new feature should be on every responsible leader's to-do list.

When You're Ready to Go Down the Copilot Terms of Use and Privacy Policy Rabbit Hole Yourself

As with all policies, these are likely to change, so checking them often is part of responsible data management. This post is based on the versions available on June 25, 2024.

https://learn.microsoft.com/en-us/copilot/microsoft-365/microsoft-365-copilot-privacy

https://learn.microsoft.com/en-us/copilot/privacy-and-protections

https://learn.microsoft.com/en-us/copilot/microsoft-365/microsoft-365-copilot-requirements


. . .

Linda Rolf is a lifelong curious learner who believes a knowledge-first approach builds valuable client relationships. She is fueled by discovering the unexpected connections among technology, data, information, people and process. For more than four decades, Linda and Quest Technology Group have been their clients' trusted advisor and strategic partner.

Linda believes that lasting value and trust are created through continuously listening, sharing knowledge freely, and delivering more than their clients even know they need. As the CIO of their first startup client said, "The value that Quest brings to Cotton States is far greater than the software they develop."