How Do ChatGPT Connectors Use Your Third-Party App Data?

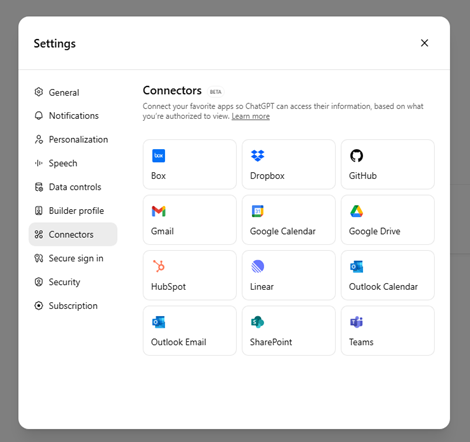

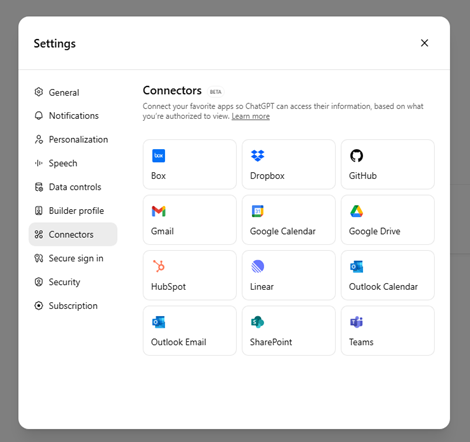

OpenAI announced a new Connectors feature for ChatGPT, hyped as yet another done-for-you efficiency. Users give ChatGPT access to their files in one of the supported apps, and all of that information becomes readily accessible in every search. Results are more relevant and better grounded in context because they draw on your own documents and your search history. But before you click, there are some real risks that need to be carefully considered.

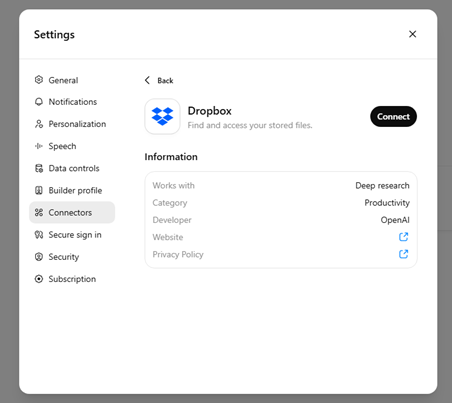

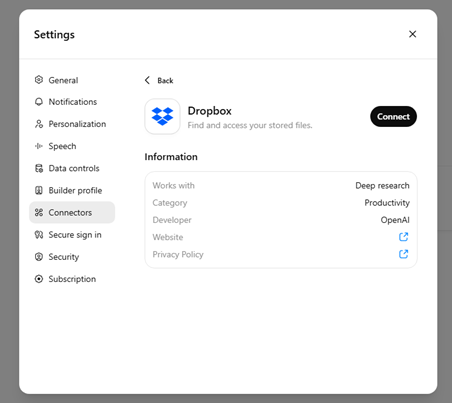

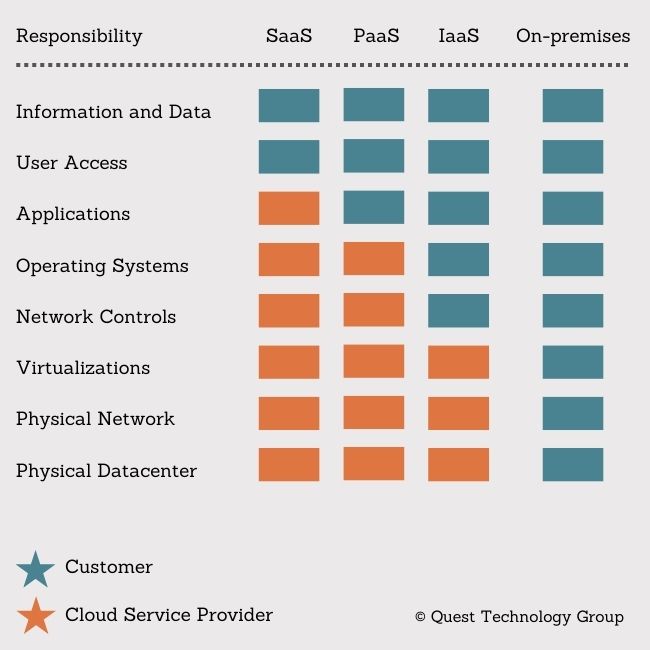

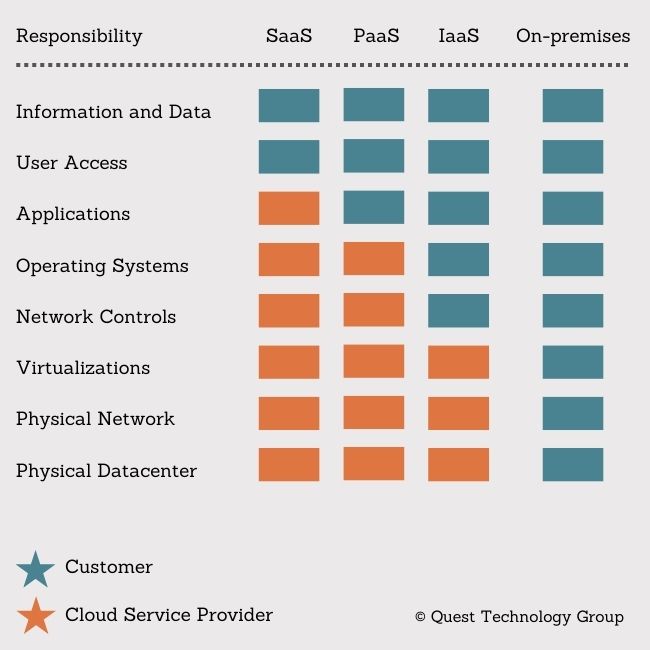

How Do These ChatGPT Connectors Use Your Data?

The best way to understand what's really happening under the covers is to read OpenAI's documentation on this handy feature. So that's where I headed.

It starts at the top. This highlighted Note at the top of the doc draws a clear us vs. them line in the sand. "Please note that connected apps are third-party services and subject to their own terms and conditions." It's a clear user beware warning. You grant ChatGPT access to your files, but what happens next is between you and the third-party provider. Ultimately, we, the trusting users, are responsible for whatever becomes of our data.

Different plans are managed differently. If you or your team have the Free, Plus, or Pro plan, then OpenAI "might" (their word) use your data to train its models. To safeguard your data, it's important that you disable "Improve the model for everyone" in your settings. We can only assume that this is enough to keep our information out of the training datasets.

ChatGPT Team, Enterprise, and Edu customers are covered by the Enterprise Policy where their data is not used for training purposes.

There are no admin controls for the Free, Plus, and Pro plans. That means that data access is all or nothing. There are no granular file permissions or user roles available to safeguard your data. All information is fair game.
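The plan differences described above can be captured in a small lookup table. This is a minimal sketch that only paraphrases this article's reading of the documentation. The names `PlanPolicy`, `PLAN_POLICIES`, and `connector_risk_notes` are hypothetical and are not part of any OpenAI API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanPolicy:
    may_train_on_data: bool  # data "might" be used for training unless the user opts out
    admin_controls: bool     # admins can grant or restrict connector access


# Assumption: values reflect this article's summary, not an official source.
PLAN_POLICIES = {
    "Free": PlanPolicy(may_train_on_data=True, admin_controls=False),
    "Plus": PlanPolicy(may_train_on_data=True, admin_controls=False),
    "Pro": PlanPolicy(may_train_on_data=True, admin_controls=False),
    "Team": PlanPolicy(may_train_on_data=False, admin_controls=True),
    "Enterprise": PlanPolicy(may_train_on_data=False, admin_controls=True),
    "Edu": PlanPolicy(may_train_on_data=False, admin_controls=True),
}


def connector_risk_notes(plan: str, training_opt_out: bool) -> list[str]:
    """Return reminders for a plan before a connector is enabled."""
    policy = PLAN_POLICIES[plan]
    notes = []
    if policy.may_train_on_data and not training_opt_out:
        notes.append('Disable "Improve the model for everyone" in settings.')
    if not policy.admin_controls:
        notes.append("No admin controls: connector access is all or nothing.")
    return notes


if __name__ == "__main__":
    for note in connector_risk_notes("Free", training_opt_out=False):
        print(note)
```

A table like this could live alongside an AI Acceptable Use Policy so that the plan an employee uses maps to a concrete checklist.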

The scope is unclear. What the documentation doesn't address, and what we can only speculate about, is how far the search reach extends. For example, what if you have a Word doc that includes links to sensitive information that another user has shared with you through a Dropbox link? Who now has access to this shared link's files? Search results include links to the relevant files found. What does that really mean?

The documentation is incomplete about Connector access to Outlook Calendar, Email, and SharePoint. For Team, Enterprise, and Edu subscriptions, the Connector requires administrators to grant user access to these apps. However, there is no mention of any admin controls, permissions, or restrictions for the Free, Plus, and Pro plans. The potential risk of users granting access to these Microsoft services is a concern that needs to be considered and clearly addressed in your AI Acceptable Use Policy.

Real Life Examples of Data Risks and Remedies

It's time for AI governance. A company owner once walked us through his company's shared Dropbox account. It was their clumsy, cheap solution to company-wide document sharing and offsite backups. Everyone had access to this Dropbox account with files continuously added, edited, and shared. Needless to say, it was a maintenance nightmare that created more problems than it solved.

As the owner was scrolling through the folders, the filename "passwords" jumped out. When we asked him what this file was (thinking that surely it couldn't be that obvious), he sheepishly admitted that it, in fact, was the keys to the kingdom documentation.

Imagine an employee connecting his or her unapproved free ChatGPT account to the company's Dropbox account. Identifying small but potentially harmful practices like these can be the first step toward responsible governance.
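A simple pre-check before connecting any shared folder to an AI tool is to look for file names that advertise secrets, like the "passwords" file in the story above. The sketch below is illustrative only. The hint words and the function names `flag_sensitive_names` and `scan_folder` are assumptions, and a name-based check will miss secrets stored under innocuous names.

```python
from pathlib import Path

# Assumption: an illustrative, incomplete list of words that suggest stored secrets.
SENSITIVE_HINTS = ("password", "passwd", "credential", "secret", "apikey", "api_key")


def flag_sensitive_names(paths):
    """Return the paths whose file name contains one of the hint words."""
    flagged = []
    for path in paths:
        name = Path(path).name.lower()
        if any(hint in name for hint in SENSITIVE_HINTS):
            flagged.append(path)
    return flagged


def scan_folder(root):
    """Walk a locally synced folder and flag suspicious file names."""
    files = (str(p) for p in Path(root).rglob("*") if p.is_file())
    return flag_sensitive_names(files)


if __name__ == "__main__":
    sample = ["Shared/HR/handbook.pdf", "Shared/IT/passwords.xlsx"]
    print(flag_sensitive_names(sample))  # ['Shared/IT/passwords.xlsx']
```

Running `scan_folder` against a locally synced copy of the shared account gives a quick list of files to move or lock down before anyone connects it.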

The Shared Responsibility Model revisited. We know from reading the Terms and Conditions of third-party providers like Dropbox and Google Drive that they don't scan for viruses and malware on file uploads. What if an employee -- intentionally or otherwise -- uploads a piece of malware to your company's Google Drive account? What happens when ChatGPT is granted access to this Google Drive account and a link to the malware is included in an AI search result?

Each of the three cloud categories (IaaS, PaaS, and SaaS) carries with it a set of responsibilities shared between the customer (you) and the cloud service provider. The shared responsibility diagram summarizes who does what.

What is immediately apparent is that you are responsible for protecting your data and the information you freely share with the cloud application, and for managing secure user access.

These are hefty responsibilities.

Microsoft Copilot is an Example of Unintended Data Leaks

A recently discovered attack on Microsoft 365 Copilot called EchoLeak has been described as the first known zero-click vulnerability targeting AI systems. Zero-click means the exploit needs no action from the victim: there is no link to click and no attachment to open. Content that the assistant processes on its own, such as an incoming email, is enough to trigger it.

Microsoft 365 Copilot is an AI assistant integrated into Microsoft's suite of office tools that includes Outlook, Word, Excel, and Teams. Reading how Microsoft Copilot safeguards your data is a masterclass in obfuscation. This leak was discovered in January 2025 and a vulnerability patch was released in May.

While Microsoft found no evidence of any customer data exploitation, the implications of this first zero-click AI exploit are significant and predictive of the risks to come. It highlights a new AI risk exposure known as an "LLM scope violation," where a large language model (LLM) like those that power ChatGPT accesses and leaks sensitive or private information without user permission or action. It's not a mental stretch to imagine how blindly allowing connections among disparate applications will create new openings for attack.

The Bottom Line

Convenience features like Connectors will continue to proliferate. Users who value productivity, efficiency, and doing more with less effort will naturally gravitate to these add-ons. New AI exploits like EchoLeak are just the tip of the iceberg. The lure of helpful tools combined with hands-free leaks creates the perfect storm for data exploitation at scale without human intervention.

It's imperative that companies adopt and actively enforce responsible guardrails around the use of AI tools in the workplace. AI isn't going away, and banning it is a fool's errand. Company leaders should start with a commitment to AI governance. This isn't a heavy-handed set of policies and procedures that no one reads. Instead, it's a collaborative, continually evolving framework that balances practical AI knowledge with policies aligned with each company's unique goals.

Are you ready to create your AI governance team?

Linda Rolf is a lifelong curious learner who believes a knowledge-first approach builds valuable, lasting client relationships. She loves discovering the unexpected connections among technology, data, information, people and process. For more than four decades, Linda and Quest Technology Group have been their clients' trusted advisor and strategic partner.

Tags: AI